2024: So much for “Exponential Growth” in AI

Here are the lessons we should all learn from the AI bubble just before it pops:

- Big tech doesn’t give a crap about the usefulness of their products, only their perceived valuations from investors.

- They are needlessly copying each other’s work, trying to make the “Next Big AI Breakthrough” so they can take over the market.

- The general population doesn’t give a shit about AI. This is all a 0.1% problem.

1. Big tech doesn’t give a crap about the usefulness of their products, only their perceived valuations from investors.

Everybody hates what Meta has done to Facebook and Instagram. Everybody hates what Google has done to Google Search. Everybody hates how finding accurate stuff online has become damn near impossible due to AI bloat, sponsored-ad bloat, and “Suggested for you” bloat.

Why is there so much bloat on prominent pages online? It exists not to make the online experience great for users; it exists so that corporate investors will invest further in the company, so the company can pour more money into bigger and bigger AI models in hopes of getting that “golden ticket” of market dominance.

In climate science, we would call it a self-reinforcing “feedback loop”: each turn of the cycle makes the situation worse.

2. They are needlessly copying each other’s work, trying to make the “Next Big AI Breakthrough” so they can take over the market.

Besides the now poorly named OpenAI, Microsoft, Google, Amazon, Meta, Anthropic, and Nvidia (yes, Nvidia too) are all building huge LLMs from the same publicly available data (basically by scraping the entire internet), on the belief that each new model will improve EXPONENTIALLY over the last one they built. Billions of dollars are being invested in these exponential growth dreams, and investors are eating it up.

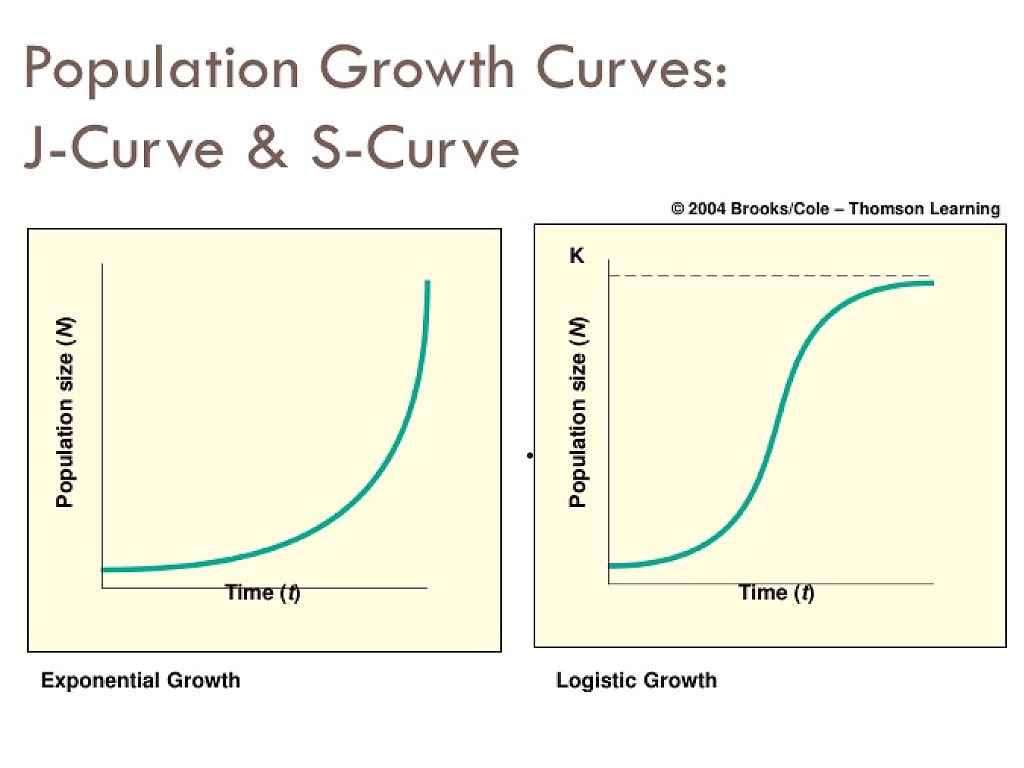

The problem is that the evidence on LLM improvements points to a classic S curve. The famous “huge” improvement we saw from GPT-3.5 to GPT-4 was likely in the middle of the S, where growth is at its maximum. New models don’t have enough good data to improve much beyond where they already are, and further improvement is likely to flatten out logarithmically instead of growing exponentially. Several studies have suggested the S curve is more likely than the exponential, but S-curve growth doesn’t get you VC funding. So all these billions of dollars, and all the excessive energy and cooling water used to make these bigger models, are likely a waste.
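To make the difference between the two curves concrete, here is a minimal Python sketch. The functions and their parameters (`cap`, `r`, `t_mid`) are illustrative assumptions, not fitted to any real model benchmarks; the only point is the shape. On a logistic (S) curve, the gain from one “generation” to the next peaks at the midpoint and then shrinks, while on an exponential curve every generation’s gain is bigger than the last.

```python
import math
from typing import Callable


def exponential(t: float, a: float = 1.0, r: float = 1.0) -> float:
    """Exponential growth a * e^(r*t): the gains keep accelerating forever."""
    return a * math.exp(r * t)


def logistic(t: float, cap: float = 100.0, r: float = 1.0, t_mid: float = 0.0) -> float:
    """Logistic (S-curve) growth: steepest at t_mid, levels off toward `cap`.

    `cap`, `r`, and `t_mid` are illustrative values, not measured from any LLM.
    """
    return cap / (1.0 + math.exp(-r * (t - t_mid)))


def gain(f: Callable[[float], float], t: float, step: float = 1.0) -> float:
    """Improvement from 'generation' t to generation t + step."""
    return f(t + step) - f(t)


if __name__ == "__main__":
    for t in range(-3, 5):
        print(
            f"t={t:+d}  logistic gain={gain(logistic, t):6.2f}  "
            f"exponential gain={gain(exponential, t):8.2f}"
        )
```

Running it prints the per-step gains side by side: the logistic column is largest around t = -1 and t = 0 and then shrinks toward zero, while the exponential column keeps climbing. If the middle of an S curve is mistaken for the start of an exponential, the next few “generations” will look disappointing.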

And now the news has broken at several news outlets. Reuters is the only one I could find without a huge paywall:

After the release of the viral ChatGPT chatbot two years ago, technology companies, whose valuations have benefited greatly from the AI boom, have publicly maintained that “scaling up” current models through adding more data and computing power will consistently lead to improved AI models.

But now, some of the most prominent AI scientists are speaking out on the limitations of this “bigger is better” philosophy.

Ilya Sutskever, co-founder of AI labs Safe Superintelligence (SSI) and OpenAI, told Reuters recently that results from scaling up pre-training – the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures – have plateaued.

“The 2010s were the age of scaling, now we’re back in the age of wonder and discovery once again. Everyone is looking for the next thing,” Sutskever said. “Scaling the right thing matters more now than ever.”

Behind the scenes, researchers at major AI labs have been running into delays and disappointing outcomes in the race to release a large language model that outperforms OpenAI’s GPT-4 model, which is nearly two years old, according to three sources familiar with private matters.

They’re abandoning the expensive “Take everything off the internet and feed it into the machine” approach for the “Let’s find some programming tricks to make it smarter” approach.

As an experienced computer scientist, I applaud the change of focus in development, but no one is pointing out the elephant in the room: Dreams of Exponential Growth that will change the world are effectively DEAD. As word of this spreads, the great AI bubble is likely to burst very quickly.

Note: This stuff just came out in the last couple of days, and there is a lot to talk about: the dreams of exponential growth, the ability to “save the world” from climate change, etc. I don’t have time to focus on all of it right now. This is a follow-up to my post from last year, 2023: The Rise and Fall of AI (that took out Social Media with it).

3. The general population doesn’t give a shit about AI. This is all a 0.1% problem.

This year Apple announced the iPhone 16 with enhanced AI capabilities. It was the worst-selling iPhone release in years.

Meanwhile, Microsoft has been pushing Copilot+ PCs for Windows 11, and they’re also not that popular. Not all Windows software can run on Arm chips, and gaming support is really weak. It seems all the AI hype from 2023 has not lasted through 2024.

Or maybe it has to do with the new “Recall” feature, which takes screenshots of your computer every 5 seconds. EVERYBODY thinks it is a huge invasion of privacy, and Microsoft seems to be surprised by this.

Exchange on Tumblr from tech workers

I found this exchange about working life at one of these big AI companies.

pyrrhiccomedy Sep 23

So like I said, I work in the tech industry, and it’s been kind of fascinating watching whole new taboos develop at work around this genAI stuff. All we do is talk about genAI, everything is genAI now, “we have to win the AI race,” blah blah blah, but nobody asks – you can’t ask –

What’s it for?

What’s it for?

Why would anyone want this?

I sit in so many meetings and listen to genuinely very intelligent people talk until steam is rising off their skulls about genAI, and wonder how fast I’d get fired if I asked: do real people actually want this product, or are the only people excited about this technology the shareholders who want to see lines go up?

Like you realize this is a bubble, right, guys? Because nobody actually needs this? Because it’s not actually very good? Normal people are excited by the novelty of it, and finance bro capitalists are wetting their shorts about it because they want to get rich quick off of the Next Big Thing In Tech, but the novelty will wear off and the bros will move on to something else and we’ll just be left with billions and billions of dollars invested in technology that nobody wants.

And I don’t say it, because I need my job. And I wonder how many other people sitting at the same table, in the same meeting, are also not saying it, because they need their jobs.

idk man it’s just become a really weird environment.

shutframe Oct 3

Like, I remember reading an article and one of the questions the author posed and that’s repeated here stuck with me, namely: what is it for? If this is a trillion dollar investment what’s the trillion dollar problem it is solving?

I finally think I have an answer to that. It’s to eliminate the need to pay another person ever again. The trillion dollar problem it’s solving is Payroll.

neoliberalbrainrot Oct 5

Production has become uncoupled from demand because of shareholder primacy. As long as profits rise quarter over quarter, it doesn’t matter what purpose the product serves or whether anyone wants it. This is bolstered by widely spread beliefs about how AI will definitely solve specific problems even if that is not currently apparent.

“Programmers are using it successfully!” (Not really, actually)

But are they? The usefulness of AI in boosting productivity has come into question. Microsoft wants programmers to use Azure and Copilot to write all new code, while Nvidia’s Jensen Huang says kids shouldn’t learn to code and should leave it up to AI.

But computer languages and libraries are constantly updated, and programmers are finding that Copilot was trained mostly on older code that no longer works. It can’t be relied upon as requirements change, and it often introduces more bugs into the code. Good programmers find that they work much better without AI coding assistants.

The benefits of AI in the workplace are way overstated

Programming was supposed to be the “go-to” example of how AI can improve productivity in the workplace, and then AI was supposed to move on to other careers. But if programmers are deciding AI tools are worthless for their work, then that kills AI’s best example.

One economist says the share of jobs AI can meaningfully affect is closer to 5%.

All of the buzz around AI this year smelled like desperation to me; the big investors are seriously worried that their big investments will not pay off anytime soon.

The religious conviction of the AI Bros

Sam Altman’s “god mode,” from a previously quoted article, can almost be taken literally. It seems the AI Bros are part of some billionaire cult worshipping a god that will justify their trillion-dollar investment. I don’t know of anyone who believes AGI is close, and yet they are making billion-dollar bets on it.

This idea of tech turning into a religion keeps coming up. For example, Microsoft is a Fucking Cult!! according to researcher Ed Zitron’s latest post. Microsoft is acting exactly like a religious cult.

CEO Satya Nadella is pushing this cult idea of “Growth Mindset,” which sounds like the same bullshit we heard from cultists in the 2000s with “The Secret” or “Law of Attraction” pseudoscience.

Nadella talks EXACTLY like a cult leader, dismissing any opposition with “Oh, that’s Fixed Thinking.” I see the same mentality in the AI Bros who really think AI will be smarter than humans within a year, like it’s the second coming or something. Religious cults have taken over the tech sector!!

Even worse is this series of utopian prophecies, as outlined by Matteo Wong writing at The Atlantic:

Over the past few months, some of the most prominent people in AI have fashioned themselves as modern messiahs and their products as deities. Top executives and respected researchers at the world’s biggest tech companies, including a recent Nobel laureate, are all at once insisting that superintelligent software is just around the corner, going so far as to provide timelines: They will build it within six years, or four years, or maybe just two.

Although AI executives commonly speak of the coming AGI revolution—referring to artificial “general” intelligence that rivals or exceeds human capability—they notably have all at this moment coalesced around real, albeit loose, deadlines. Many of their prophecies also have an undeniable utopian slant. First, Demis Hassabis, the head of Google DeepMind, repeated in August his suggestion from earlier this year that AGI could arrive by 2030, adding that “we could cure most diseases within the next decade or two.” A month later, even Meta’s more typically grounded chief AI scientist, Yann LeCun, said he expected powerful and all-knowing AI assistants within years, or perhaps a decade. Then the CEO of OpenAI, Sam Altman, wrote a blog post stating that “it is possible that we will have superintelligence in a few thousand days,” which would in turn make such dreams as “fixing the climate” and “establishing a space colony” reality. Not to be outdone, Dario Amodei, the chief executive of the rival AI start-up Anthropic, wrote in a sprawling self-published essay last week that such ultra-powerful AI “could come as early as 2026.” He predicts that the technology will end disease and poverty and bring about “a renaissance of liberal democracy and human rights,” and that “many will be literally moved to tears” as they behold these accomplishments. The tech, he writes, is “a thing of transcendent beauty.”

https://www.theatlantic.com/technology/archive/2024/10/agi-predictions/680280/

From what I can tell, this is all based on faith. It rests on the idea that there is a “Moore’s Law” for the growth of LLM sophistication, but no one has been able to prove that this is true. In fact, there is more evidence that LLM capabilities have basically flatlined, and that ALL the advancements in AI this year are not proof of smarter LLMs, just better programming by the developers. This seems to be the case with OpenAI’s o1, the “reasoning” LLM, which doesn’t reason at all but runs multiple redundant chat queries to make sure it answers in the best way possible, which of course costs much more energy to use.

To be even more cynical, these predictions of imminent AGI are promises made to investors to raise money, and if AGI does not materialize soon, AI investments will disappear.

AI Hype is nothing but marketing

I guarantee when the first AI company announces that they achieved “AGI” it will be 100% marketing, and 100% bullshit. We absolutely need a way to test any claims, or some AI company is going to declare it way too early, and investors are going to be pissed!

The only tech person who seems trustworthy enough is Linus Torvalds, the creator of Linux:

And here’s a nice video to end on: